Reading time: 13 minutes

If you want to become a proficient hacker, one of the things you need to learn is programming. This article introduces a series of courses dedicated to various programming languages. Since I believe in never taking anything for granted, today I will explain some fundamental concepts that will help you approach these courses with a more informed perspective. I’ll start with how the computer works. It’s the tool that will accompany you throughout all the courses, and you’ll learn to use it like never before. I will explain how it works and what its most important components are, so that you can fully grasp what a programming language is. Specifically, here’s what I’ll be discussing today: the computer, memory, information representation, the CPU, and programming languages.

1. Computer

A computer is a fundamentally important tool in our daily lives. It enables us to do things that were unimaginable just a century ago. But what exactly is it, and how does it work?

Without delving into too many details, we can think of a computer as a machine that waits for instructions to execute. Like a calculator, it doesn’t show anything until we ask it to perform an addition, multiplication, and so on.

I know this may sound strange, as we are used to a different idea: typically, we simply turn on the computer, and it starts doing things for us. In reality, this happens because someone has instructed it on what to do after being powered on. So, there’s someone who, in some way, has told our computer “Every time you’re turned on, prepare all your components, and then launch the operating system”.

The operating system itself (Windows, macOS, Linux, or others) is nothing more than a set of instructions. This means that, for example, to manage the startup, the creators of your operating system have made sure that your computer understands this series of commands: “1. Prepare everything you need to run the system while displaying a loading icon on the screen; 2. Display the login screen; 3. Wait for the user to enter their password; 4. Check the password, and if it’s correct, allow them in and show the desktop and all available applications” and so on.

Of course, I’m simplifying things quite a bit, but I want you to grasp the underlying concept: any application you’ve used on your computer works because someone in the past has written a program, which is a set of instructions, telling your computer what to do when that application is launched. The operating system is fundamental because it acts as an intermediary between your computer’s hardware[1] and the applications running on it. That’s why it starts automatically when you power on your computer and doesn’t require you to click on a specific icon, as ordinary applications do. By learning a programming language, you’ll be able to write your own programs, harnessing your computer’s full potential.

Towards the end of this article, it will become clearer how you can write a program. For now, I ask you to set aside all the software[2] aspects and consider only the computer itself. As I mentioned earlier, in this case, we simply have a device waiting for someone to tell it what to do, and here we return to the calculator example. Of course, a computer is much more complex than a simple calculator.

Since presenting it in detail would require an entire course, today I’ll limit our discussion to its three fundamental hardware components: primary or main memory (e.g. RAM), secondary memory or mass storage (e.g. a hard disk or SSD), and the processor (CPU). Everything this machine can do is made possible by these three components working together, so once you understand how they cooperate, you’ll have a general overview of the basic operation of a computer.

Please note that when I refer to computers, I also include smartphones and tablets because their architectures are very similar.

2. Memory

Just as with a human being, a computer’s memory is the part that allows it to remember all the information it encounters. As I mentioned earlier, we mainly have two types of memory: main memory and mass storage. The first is a “volatile” memory, meaning it loses all its information when the computer is turned off. The second, on the other hand, is a “non-volatile” memory, which means that when you turn off the computer, the data stored in it is not lost. Mass storage is used to hold files, applications, and anything else that must remain available over time. Main memory, on the other hand, is used to hold the temporary information that applications need while they are running.

At this point, you might wonder: why is this distinction necessary? Can’t the temporary information that applications need be directly managed by mass storage? Do we really need volatile memory? You should know that mass storage is generally much slower than main memory. This is a problem because users of applications expect to use a fast and responsive tool. This is why, generally, during the execution of applications, we rely on RAM while saving only important information that must not be lost to secondary memory.

To give you an example, let’s suppose we have a photo editing program. When the user opens an image within the application, the image file is loaded from mass storage into RAM. Loading the image into RAM significantly speeds up the handling of changes, keeping the user experience smooth and responsive. When the user makes changes to the image (e.g., adds filters, crops the image, or changes the color), these modifications are temporarily saved in RAM. This way, the user can immediately see the effect of the changes without applying them to the original file stored in mass storage. In other words, in this case, RAM acts as a “temporary workspace” for pending changes. When the user decides to save the image after making the changes, the application will copy the modified version from RAM to the original file on mass storage.

Well, at this point, you should have a clear understanding of how the various memories interact. However, there’s still one piece missing. Could you tell me how information is represented within memory?

3. Information Representation

Let’s say you have a document on your computer. Naturally, when you open it, you’ll see its contents as a series of characters. However, you should know that this is merely a representation that is convenient for us humans. We are accustomed to communicating in our language, so when we read information, we expect to find words we understand.

In reality, a computer doesn’t understand our language; for it, there are only two values: 0 and 1. A single value of this kind is the smallest piece of information that can be stored and is known as a “bit”. Please note that when I talk about 0 and 1, I’m still using a “representation convenient for us”. In fact, if we were to look inside the memory cells[3], we wouldn’t find the characters 0 or 1 written anywhere. What we would observe are simply two distinguishable physical states, for example the presence (1) or absence (0) of an electrical charge or voltage. So, how do we represent the information that accompanies us every day using these two states?

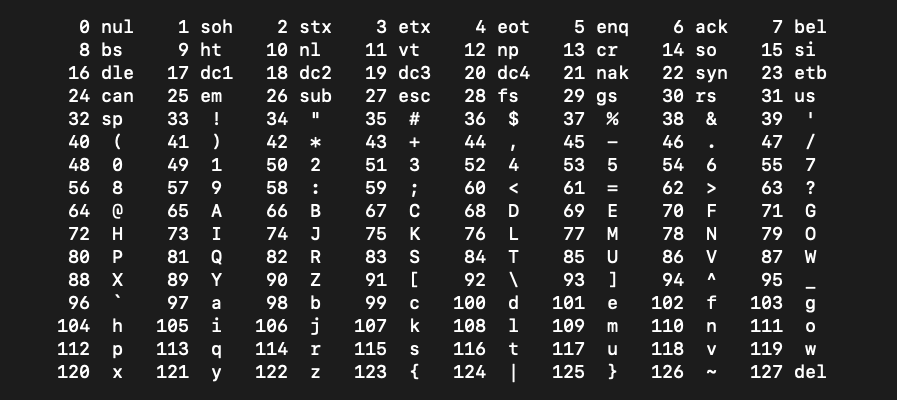

In practice, encoding systems are used. For example, let’s say we want to store the word “hello” in memory. We could define an encoding by associating a number with each character. The most commonly used standards are ASCII and Unicode. Below, you can see the ASCII encoding:

According to this encoding, ‘h’ is associated with the number 104, ‘e’ with 101, ‘l’ with 108, and ‘o’ with 111. Thus, the word “hello” can be represented by the sequence “104 101 108 108 111”. Why do we need to transform words in this way if we said that a computer only understands 0 and 1?

You should know that numbers can be represented in various systems. Let me explain further: the one we’re accustomed to is the decimal numeral system, but it’s also possible to represent any number in the binary numeral system. While the decimal system uses ten digits (from 0 to 9), the binary system uses only two: 0 and 1. This means that with this system, you can write any number as a sequence of 0s and 1s. For example, the series of numbers we saw earlier can be rewritten as “01101000 01100101 01101100 01101100 01101111”. For now, I ask you to take my word for it. In future lessons, when necessary, I’ll explain how this system works in more detail.

What matters for now is that we’ve found a way to store the word “hello” in a format understandable by a computer. This was just an example, but there are different encoding types to store various data types. However, the underlying concept remains the same: we need to find a way to bridge the gap between the “language of computers” and our language, and vice versa.

4. CPU

The CPU, or Central Processing Unit, is the component of a computer capable of performing operations. To complete these operations, it relies on the memories we introduced earlier. We tell the CPU which operation to perform by means of instructions. Yes, in a way, I’m talking about the same instructions I mentioned earlier. However, there’s a clarification to be made. Earlier, I spoke of instructions like “Display the login screen” or “Wait for the user to enter their password”. The truth is, even though it may seem strange, the CPU can only perform a small set of basic operations, such as additions, subtractions, comparisons, and moving data to and from memory. Therefore, what might appear as a simple operation like “Check the password, and if it’s correct, allow entry” must be broken down into many elementary operations to be executed in a specific order.

What’s remarkable is the incredible speed at which the CPU can perform these operations. It’s this speed that makes complex applications like the ones we use today possible. However, in general, it’s extremely challenging to build a program using the processor’s instructions directly. This is precisely why we use so-called “programming languages”. The act of using these languages to create an application is called “programming”.

5. Programming Languages

A programming language provides a higher level of abstraction than CPU instructions, making it easier for programmers to write and understand programs. Of course, the computer’s architecture remains the same as I just presented to you: the CPU cannot directly comprehend the instructions of these languages. This is precisely why there’s a need for other programs like compilers, which take code written in such a language and translate it into instructions supported by the CPU.

There are different possible levels of abstraction. There are low-level languages (e.g. Assembly) whose logic closely resembles CPU instructions. These are languages where you can have full control over the underlying hardware. However, when working at these levels, designing a large application remains challenging. It requires reasoning in terms of elementary operations, a detailed understanding of CPU architecture, and deep knowledge of memory and all the components that can be leveraged in a computer. It’s highly technical and error-prone work. This is why programming in these languages is often reserved for specific scenarios.

Most developers, on the other hand, work with high-level programming languages. A high-level programming language is designed to be understandable and more easily manageable by humans. These languages offer a high level of abstraction compared to machine instructions, meaning they are closer to human language and less detailed in terms of the computer’s internal workings. These languages are designed to simplify the software development process, allowing programmers to focus on the logic and structure of the program rather than the intricacies of the underlying hardware.

The first language I recommend you study is C. It is one of the most significant programming languages. It has been widely used for many decades and continues to be used today. Learning C provides a solid foundation for understanding other programming languages. It’s a medium-low-level language, which means it provides enough control over the computer’s hardware resources without reaching the complexity levels of low-level languages. This makes it powerful and fast, although it does require attention from the programmer to manage memory and resources appropriately. Starting to program at this level of complexity will help you understand the inner workings of a computer without overly complicating things.

As time goes on, in various courses, I will delve into all the major programming languages. Each course will be divided into several lessons, and between lessons, I will post previews on my Instagram profile. From these previews, you can already learn some of the topics that will then be thoroughly explored in the final lessons I will publish.

[1] Hardware is the collection of all the physical components that make up a computer (CPU, memory, etc.).

[2] The term “software” generally refers to the collection of all programs (applications, operating systems, etc.) that can run on a computer’s hardware. In some contexts, the word “software” is also used to refer to a single program.

[3] The term “memory cells” refers to the individual data storage elements found in memory devices. In the simplest case, each memory cell stores a single bit of information, which can be a binary value of 0 or 1.